How I Crammed So Many Services Onto My Domain's Lightsail Box

I’ve got a single Lightsail instance, and somehow it’s serving my Jekyll blog, FoundryVTT game server, Netdata dashboard, and a few other odds and ends—all behind proper TLS with reverse proxies, authentication where I need it, and without breaking the bank… and I’m still adding.

This post is a quick walkthrough of how I did it, what tools I used, and how it hasn’t caught fire. Yet.

The Setup

- Host: AWS Lightsail (2 vCPUs / 4 GB RAM / 80 GB SSD, ~$24 a month)

- OS: Ubuntu

- Domains: jck.sh, dnd.jck.sh, monitoring.jck.sh

- Main services:

  - Jekyll blog (jck.sh)

  - FoundryVTT (dnd.jck.sh)

  - Netdata (monitoring.jck.sh)

- Supporting Services:

  - Caddy

  - Docker

  - GitHub Actions Runner

Why Lightsail?

Yeah, there are cheaper services. But with Lightsail, I’m already inside the AWS ecosystem, so if I want to start doing other things (like S3 buckets, Lambda functions, or messing with Route 53), I don’t have to worry about egress fees or cross-cloud jank.

Could I go cheaper? Absolutely. I could self-host from home on a business fiber line with a static IP for ~$15/month. But then I’d have to manage hardware, deal with power bills, and expose myself to more security headaches.

Sure, there are other VPS providers that charge less—but Lightsail hits a sweet spot of simplicity, power, and decent integration with other AWS services.

Reverse Proxy: Caddy

I use Caddy as my reverse proxy to handle HTTPS and route traffic between my domain and subdomains. It’s probably the most underrated part of my setup. Caddy just works. No cron jobs. No certbot scripts. It automatically fetches and renews TLS certificates from Let’s Encrypt, and the config is dead simple.

Normally I would run Caddy in Docker, but I chose to install it directly on the host to avoid Docker networking headaches. This way, I can spin up any containerized service and immediately reverse proxy it without messing with docker network aliases or custom bridge setups. Just point Caddy at localhost and it sees everything.
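A rough sketch of that workflow, not lifted from the real box: the helper name, container name, image, and port are all placeholders. Binding the published port to 127.0.0.1 is an assumption on my part (the post doesn't say how ports are published), but it keeps the service reachable only through Caddy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start a container whose port is published only on
# 127.0.0.1, so host-level Caddy can reach it but the outside world cannot.
run_local_service() {
  local name="$1"            # container name (placeholder)
  local image="$2"           # image to run (placeholder)
  local host_port="$3"       # must be unique on the host
  local container_port="$4"  # port the app listens on inside the container
  docker run -d --name "$name" --restart unless-stopped \
    -p "127.0.0.1:${host_port}:${container_port}" \
    "$image"
}

# Example call (not executed here):
#   run_local_service whoami traefik/whoami 8081 80
# The matching Caddyfile entry would then be something like:
#   whoami.example.com {
#       reverse_proxy localhost:8081
#   }
```

No Docker network plumbing is involved: the container only needs a free host port, and Caddy only needs to know that port.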

Here’s a look at part of my actual Caddyfile:

    {
        email [email protected]
        acme_ca https://acme-v02.api.letsencrypt.org/directory
    }

    dnd.jck.sh {
        reverse_proxy localhost:30000
        tls {
            protocols tls1.2 tls1.3
        }
    }

    jck.sh {
        reverse_proxy {
            to localhost:3000 localhost:3001
            lb_policy first
            health_uri /health
        }
        tls {
            protocols tls1.2 tls1.3
        }
    }

    monitoring.jck.sh {
        reverse_proxy localhost:19999
        tls {
            protocols tls1.2 tls1.3
        }
        basicauth {
            admin [hashed-password]
        }
        header {
            Strict-Transport-Security "max-age=31536000; includeSubDomains; preload"
        }
    }
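Two practical notes on that config. The `[hashed-password]` placeholder takes a hash rather than the plaintext, and `caddy hash-password` will print one for you. And after editing the file it is worth validating before reloading. Below is a minimal sketch of the second step; the function name is made up, and the default path and the systemd unit name assume Caddy was installed from the official Ubuntu package.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reload Caddy only if the Caddyfile parses cleanly.
# /etc/caddy/Caddyfile and the "caddy" systemd unit are assumptions based on
# a package install; adjust both if Caddy was installed another way.
reload_caddy() {
  local config="${1:-/etc/caddy/Caddyfile}"
  if caddy validate --config "$config" --adapter caddyfile; then
    sudo systemctl reload caddy
  else
    echo "Caddyfile at $config failed validation; not reloading" >&2
    return 1
  fi
}

# To generate the basicauth hash interactively (prompts for the password):
#   caddy hash-password
```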

Monitoring and Alerting

Netdata is a decent lightweight alternative to the other monitoring solutions out there. You can run both the collector and the dashboard on the same host, which is very nice for this use case. Yes, I realize that keeping all of my monitoring on the host being monitored is not that smart, but it’s a Lightsail box running Foundry, sooooo.

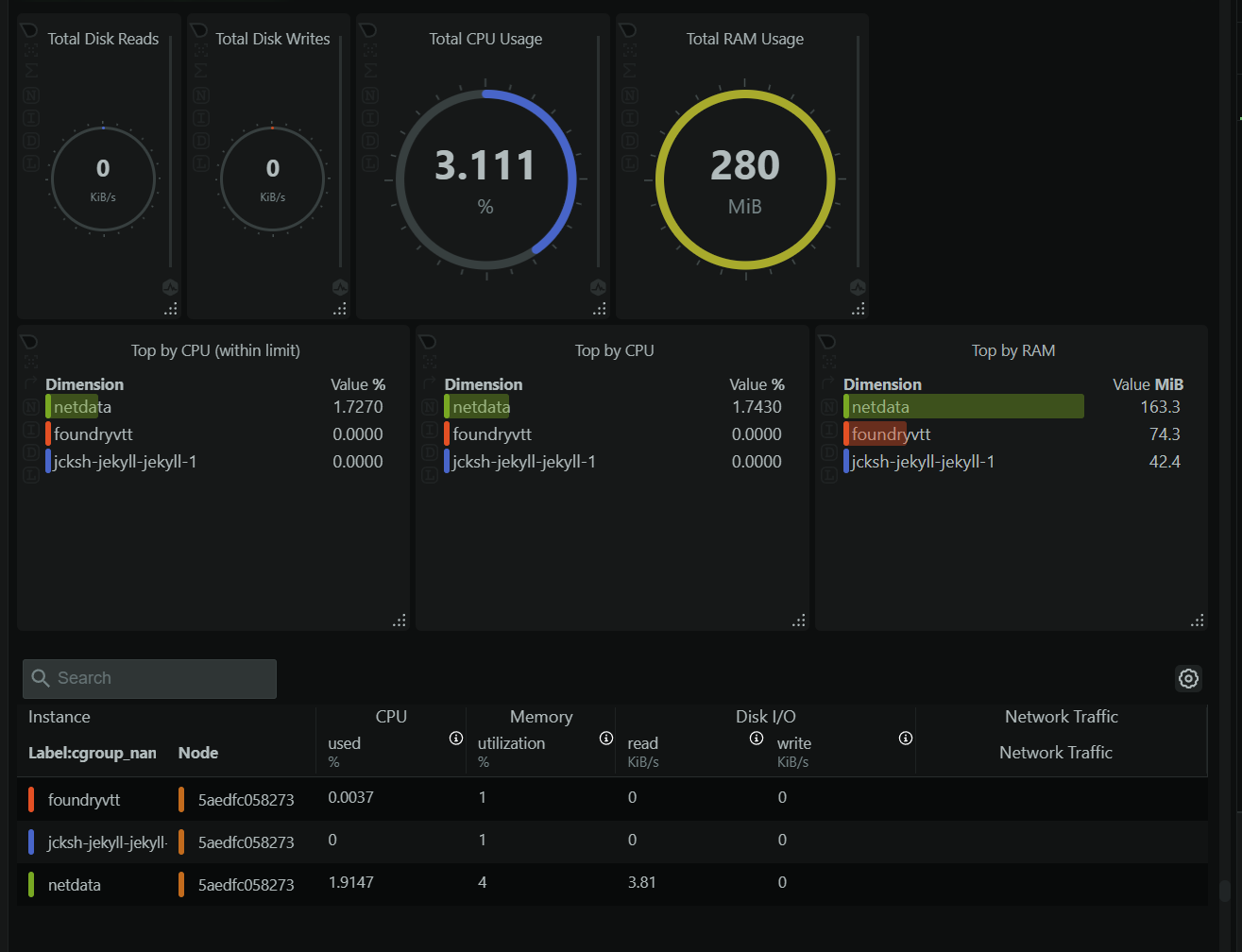

I pipe my alerts from Netdata to my Discord server. Sick. No PagerDuty. If I start to blow up my Lightsail box while doing things, I’ll know. Setting it up is fairly straightforward. All of my services are Dockerized, so all I have to do is mount /var/run/docker.sock into my Netdata container and it picks all of them up.
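Before trusting the alert pipeline, it helps to confirm the Discord webhook itself works. Here is a small sketch using Discord's standard webhook JSON payload; the function name is made up, and the Netdata settings mentioned in the comments are what I understand the Discord notification config to use, so check them against your Netdata version.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test: post a plain message to a Discord webhook.
# Assumes the message contains no double quotes or backslashes, since it is
# dropped straight into the JSON body.
send_discord_message() {
  local webhook_url="$1" message="$2"
  curl --fail --silent --show-error \
    -H "Content-Type: application/json" \
    -d "{\"content\": \"${message}\"}" \
    "$webhook_url"
}

# Netdata side (assumed key names, set in health_alarm_notify.conf):
#   SEND_DISCORD="YES"
#   DISCORD_WEBHOOK_URL="<your webhook URL>"
#   DEFAULT_RECIPIENT_DISCORD="alarms"
# Container discovery comes from the socket mount on the Netdata container:
#   -v /var/run/docker.sock:/var/run/docker.sock:ro
```

If the test message shows up in the channel, any remaining problem is on the Netdata side rather than with Discord.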

As you can see, these services have a very small footprint when idle. Netdata is really only spiking because I was on the dashboard. FoundryVTT only gets used once a week (D&D night, yeah boiii), but when it is in use it spikes quite a bit since it’s not exactly lightweight. My instance is honestly probably too big, but hey, maybe one day I’ll want to run a small Minecraft server. I need to keep my options open.

Overengineered Docker Setup

If you looked closely at my Caddyfile, you’ll notice it points to two local ports for the Jekyll site. That’s intentional.

When I push updates to the blog’s GitHub repo, a GitHub Action kicks off: it rebuilds the Docker image, spins up a second Jekyll container, lets Caddy load balance between the two, then shuts the old one down. Zero downtime.
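The post doesn't include the workflow itself, so what follows is a reconstruction of the swap step rather than the actual script. The container names (`blog-3000`, `blog-3001`), the internal port 4000, and the function names are all assumptions; only the two host ports and the `/health` path come from the Caddyfile.

```shell
#!/usr/bin/env bash
# Sketch of a blue/green swap between the two upstream ports.

# Given the port currently serving traffic, print the idle one.
other_port() {
  case "$1" in
    3000) echo 3001 ;;
    3001) echo 3000 ;;
    *) echo "unknown port: $1" >&2; return 1 ;;
  esac
}

# Poll /health on the given port until it answers or attempts run out.
wait_healthy() {
  local port="$1" attempts="${2:-30}" i=0
  while [ "$i" -lt "$attempts" ]; do
    if curl --fail --silent --output /dev/null "http://localhost:${port}/health"; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Start the new image on the idle port, then retire the old container
# only once the new one reports healthy.
deploy() {
  local image="$1" live_port="$2" new_port
  new_port="$(other_port "$live_port")" || return 1
  docker rm -f "blog-${new_port}" >/dev/null 2>&1 || true  # clear leftovers
  docker run -d --name "blog-${new_port}" \
    -p "127.0.0.1:${new_port}:4000" "$image" || return 1
  if wait_healthy "$new_port"; then
    docker rm -f "blog-${live_port}"
  else
    echo "new container never became healthy; keeping blog-${live_port}" >&2
    docker rm -f "blog-${new_port}"
    return 1
  fi
}
```

A call such as `deploy blog:latest 3000` would bring the new build up on 3001 and then remove the container on 3000. With `lb_policy first`, Caddy sends traffic to the first upstream it considers healthy, so the old container keeps serving until the new one is ready.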

Is it overkill? Absolutely. But it works—like a poor man’s Kubernetes: just enough automation to feel slick, without the overhead of managing an actual Kubernetes cluster.

I bundled my FoundryVTT server and Netdata config into a single infrastructure repo, since they don’t need their own. Each new service I add will get its own docker-compose.yml file. The only real requirement is that it runs on a unique port.
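Since the unique-port rule is the one thing that can silently bite, a quick check across compose files is cheap insurance. This is a hypothetical helper, not part of the real repo, and it only understands the short `host:container` port syntax (optionally with a bind address in front).

```shell
#!/usr/bin/env bash
# Hypothetical check: print every host port that is published more than once
# across the docker-compose files passed as arguments.
find_duplicate_ports() {
  awk '
    # Match short-syntax mappings such as  - "3000:4000"  or  - 127.0.0.1:3000:4000
    /^[ \t]*-[ \t]*"?([0-9.]+:)?[0-9]+:[0-9]+/ {
      line = $0
      gsub(/[^0-9.:]/, "", line)      # drop the dash, quotes, spaces, "/tcp"
      n = split(line, parts, ":")
      host_port = parts[n - 1]        # second-to-last field is the host port
      count[host_port]++
    }
    END { for (p in count) if (count[p] > 1) print p }
  ' "$@"
}

# Example (not executed here):
#   find_duplicate_ports */docker-compose.yml
```

Empty output means no two services claim the same host port.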

Backups

I don’t care about anything on this host whatsoever. Everything important is in source control EXCEPT!!!! my FoundryVTT data. That stuff needs to be backed up off the host regularly. FoundryVTT does back itself up regularly, but those backups land on the same host.

So, a simple backup script to S3:

    set -e

    BACKUP_NAME="foundry_backup_$(date +%F_%H-%M-%S).tar.gz"
    BACKUP_DIR="/home/ubuntu/backups"
    BACKUP_PATH="$BACKUP_DIR/$BACKUP_NAME"

    mkdir -p "$BACKUP_DIR"

    # Create tarball from Docker volume
    docker run --rm \
      -v services_foundry_data:/volume \
      -v "$BACKUP_DIR":/backup \
      alpine \
      tar czf "/backup/$BACKUP_NAME" -C /volume .

    # Upload to S3 with explicit region
    aws s3 cp "$BACKUP_PATH" "s3://jcksh-foundryvtt-backups/backups/$(date +%F)/$BACKUP_NAME" \
      --region us-east-1 \
      --profile foundry-backup

    # Remove local backup after upload
    rm -f "$BACKUP_PATH"

Followed by a once-a-month cron job. That keeps S3 costs down (already negligible), and I can still run the script by hand whenever I want.
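For reference, a monthly schedule in crontab terms might look like the sketch below. The script path, log path, and the 03:00-on-the-1st timing are placeholders, since the post doesn't say where the script lives or when it runs.

```shell
#!/usr/bin/env bash
# Hypothetical paths and schedule: 03:00 on the 1st of every month.
# Fields: minute hour day-of-month month day-of-week command
CRON_LINE='0 3 1 * * /home/ubuntu/backup-foundry.sh >> /home/ubuntu/backup-foundry.log 2>&1'

# Install the job without clobbering other entries: keep the current crontab,
# drop any earlier copy of this job, then append the new line.
install_backup_cron() {
  { crontab -l 2>/dev/null | grep -Fv 'backup-foundry.sh'; echo "$CRON_LINE"; } | crontab -
}
```

Running `install_backup_cron` twice leaves a single entry, so it is safe to keep in a provisioning script.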

Things That Could Be Taken Further

Right now, I manage all of my secrets using .env files. It’s simple, portable, and works well enough for a one-man setup. I like to operate under the assumption that this environment could disappear at any moment, so being able to spin everything up somewhere else quickly is a core part of the design.

Could I use something more robust like AWS Secrets Manager or HashiCorp Vault? Sure. But honestly, that’s overkill for what I’m doing. More complexity, more moving parts, more ways for something to break. .env files keep it lean and low-maintenance.
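To make the "spin everything up somewhere else" idea concrete, here is a sketch of a bootstrap loop. It assumes a layout the post doesn't spell out: one directory per service, each holding a docker-compose.yml and its own .env (which `docker compose` reads automatically from the project directory). The function name is made up.

```shell
#!/usr/bin/env bash
# Hypothetical bootstrap: start every service found under a root directory.
# A service whose .env is missing is skipped with a warning rather than
# started half-configured.
bring_up_all() {
  local root="$1" compose_file dir
  for compose_file in "$root"/*/docker-compose.yml; do
    [ -f "$compose_file" ] || continue   # glob matched nothing
    dir="$(dirname "$compose_file")"
    if [ ! -f "$dir/.env" ]; then
      echo "skipping $dir: no .env file" >&2
      continue
    fi
    (cd "$dir" && docker compose up -d) || return 1
  done
}

# Example (not executed here):
#   bring_up_all /home/ubuntu/services
```

On a fresh box the only manual step left is copying the .env files over, which is the part a secrets manager would otherwise handle.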